HashiCorp Boundary

Last Updated: June 11, 2022

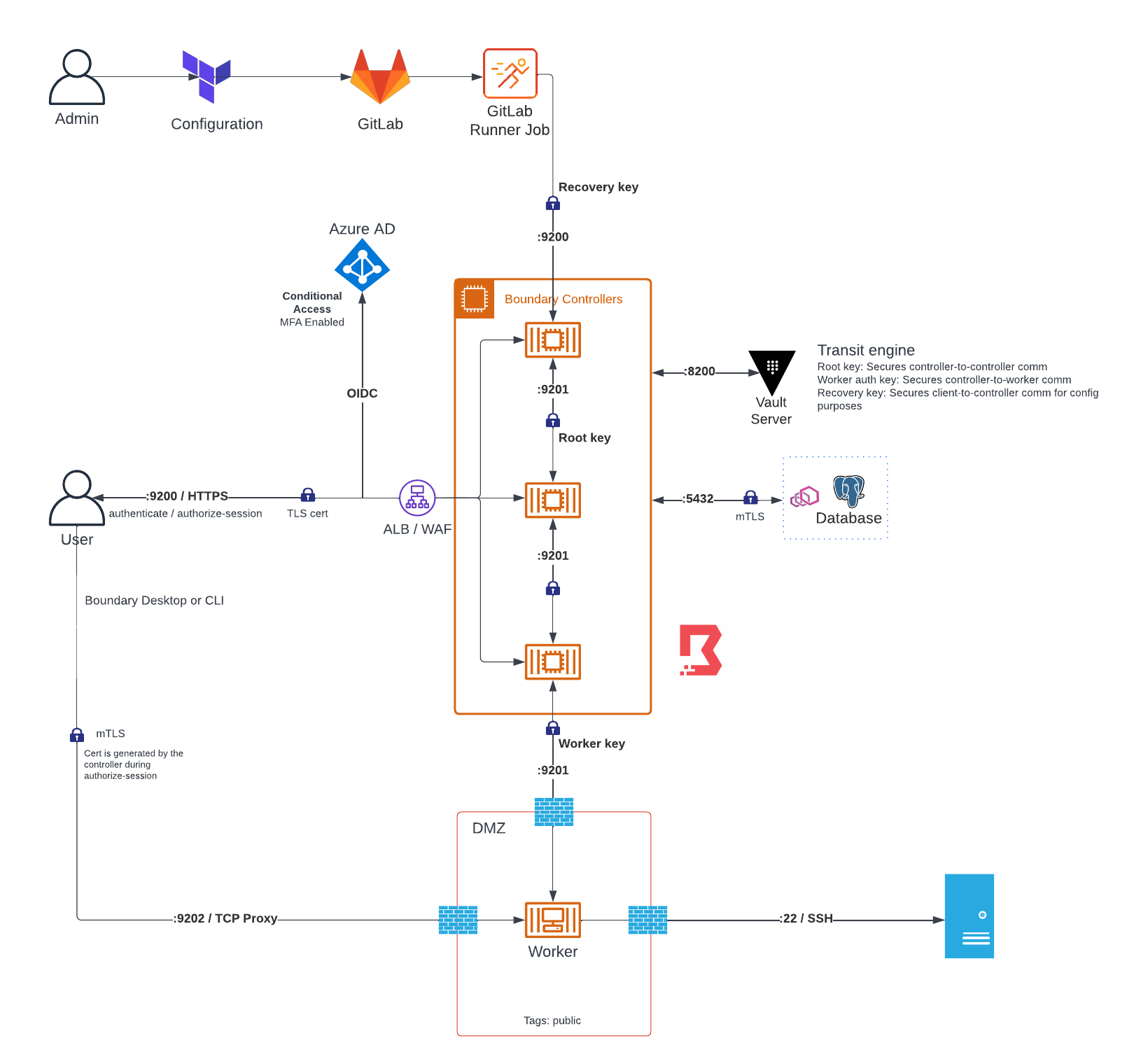

Boundary architecture

The Boundary system is composed of three components: controllers, workers, and the database. Workers run separately from the controllers, and each worker's network access should be restricted to only the systems it needs to reach. This could be done through a network firewall or an AWS security group.
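As a rough sketch of the security group approach, the Terraform below limits a worker to the proxy, cluster, and one target port. The variable names, CIDR ranges, and the choice of SSH as the only target protocol are assumptions for illustration; ports 9202 and 9201 match the proxy and cluster ports used later in this article.

```hcl
# Hypothetical inputs; replace with values from your own network.
variable "vpc_id" {
  type = string
}

variable "controller_cidr" {
  type = string
}

variable "target_cidr" {
  type = string
}

resource "aws_security_group" "boundary_worker" {
  name   = "boundary-worker"
  vpc_id = var.vpc_id

  # Clients connect to the worker's proxy port.
  ingress {
    description = "Boundary client proxy connections"
    from_port   = 9202
    to_port     = 9202
    protocol    = "tcp"
    cidr_blocks = ["0.0.0.0/0"]
  }

  # The worker reaches the controllers on the cluster port.
  egress {
    description = "Worker to controller cluster port"
    from_port   = 9201
    to_port     = 9201
    protocol    = "tcp"
    cidr_blocks = [var.controller_cidr]
  }

  # The worker may only reach targets over SSH (assumption: SSH-only targets).
  egress {
    description = "Worker to SSH targets"
    from_port   = 22
    to_port     = 22
    protocol    = "tcp"
    cidr_blocks = [var.target_cidr]
  }
}
```

A worker that also needs to reach Vault for its KMS (as in the setup below) would need an additional egress rule for that port.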

Multi-factor authentication is controlled through a Conditional Access rule on Azure AD.

A web application firewall / load balancer can be placed in front of the Boundary controller for extra protection, as well as to distribute the load across controllers.

A user initially accesses Boundary through the CLI or Desktop app. The client communicates with the Boundary controllers through the API service port (default 9200) over TLS.

Once the user is authenticated with the controller (through Azure AD / OIDC), they can connect to a Target.

The authorize-session command generates a certificate and private key for the client to use for connecting to the worker (mTLS).

Multiple workers can be added to the system. Workers can be tagged to specify what systems they can access.
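To illustrate tagging, a worker's config file could declare tags roughly as follows. This is a fragment of the worker block only, and the worker name, tag keys, and tag values are hypothetical.

```hcl
worker {
  name = "worker-db-01" # hypothetical worker name

  # Tags are arbitrary key/value-list pairs that targets can filter on.
  tags {
    type   = ["database"]
    region = ["us-east-1"]
  }
}
```

A target could then be limited to matching workers with a worker filter expression such as `"database" in "/tags/type"`.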

Communication between the components of the system is secured by a KMS key that is stored in Vault.

Note that this key could also be stored in Azure Key Vault or AWS KMS.
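As a sketch of the AWS KMS alternative, the root key block might look like the following. The region and key ID are placeholders, and this assumes AWS credentials are supplied through the standard environment variables or an instance profile.

```hcl
kms "awskms" {
  purpose    = "root"
  region     = "us-east-1"    # assumption: adjust to your region
  kms_key_id = "<kms-key-id>" # placeholder for your own key ID
}
```

The same pattern would apply to the worker-auth and recovery purposes, each with its own key.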

Different keys handle different functions.

The root key secures controller communication and is used to encrypt values in the database.

The worker-auth key authenticates a worker to a controller.

Learn more about the security here.

Boundary can proxy any TCP connection, and the CLI can also launch several native clients directly: HTTP, SSH, Postgres (psql), and RDP (mstsc).

Boundary can also broker dynamic credentials from Vault. For example, a database admin can generate connection information for a Postgres database on demand. See more here.
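A minimal Terraform sketch of the Vault side of credential brokering, using the Boundary provider, might look like this. The variable names, the Vault path `database/creds/dba`, and the resource names are hypothetical, and it assumes a Vault database secrets engine is already configured for the Postgres instance.

```hcl
# Hypothetical inputs.
variable "project_scope_id" {
  type = string
}

variable "boundary_vault_token" {
  type      = string
  sensitive = true
}

# Credential store: tells Boundary how to reach Vault.
resource "boundary_credential_store_vault" "vault" {
  name        = "vault"
  description = "Vault credential store"
  address     = "https://vault.service.consul:8200"
  token       = var.boundary_vault_token
  scope_id    = var.project_scope_id
}

# Credential library: the Vault path Boundary reads to mint credentials.
resource "boundary_credential_library_vault" "postgres_dba" {
  name                = "postgres-dba"
  description         = "Dynamic Postgres credentials for DBAs"
  credential_store_id = boundary_credential_store_vault.vault.id
  path                = "database/creds/dba" # hypothetical Vault database role
  http_method         = "GET"
}
```

The library then has to be attached to a target; the attribute name for that has changed between provider versions, so check the documentation for the version you run. Boundary's documentation also lists requirements for the Vault token given to the credential store.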

Boundary can be monitored through a Prometheus-compatible /metrics endpoint and audited through the events file sink (for ingestion into Splunk or similar).
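As a sketch, the controller config could enable both with blocks along these lines. The listener address, sink name, and log path are assumptions, and TLS is disabled on the ops listener only to keep the example short.

```hcl
# Ops listener: serves the /metrics endpoint separately from the API.
listener "tcp" {
  address     = "0.0.0.0:9203"
  purpose     = "ops"
  tls_disable = true # assumption: metrics scraped over a trusted network
}

# Event configuration: write audit events to a file for later ingestion.
events {
  audit_enabled = true

  sink {
    name        = "audit-file" # hypothetical sink name
    description = "Audit events written to a file"
    event_types = ["audit"]
    format      = "cloudevents-json"

    file {
      path      = "/var/log/boundary" # hypothetical path
      file_name = "audit.log"
    }
  }
}
```

When running under Nomad as shown later, the ops port would also need to be exposed in the group's network block, and the log path would need to be a mounted volume for a log shipper to read it.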

Note that Boundary does not need multiple controllers; unlike some other HashiCorp products, it does not use the Raft consensus algorithm.

Boundary setup

KMS

In this example we are using Vault to provide the key management service. Use the following Terraform code to create the resources.

resource "vault_mount" "boundary_transit" {

path = "boundary_kms"

type = "transit"

description = "Transit KMS for Boundary"

default_lease_ttl_seconds = 3600

max_lease_ttl_seconds = 86400

}

resource "vault_transit_secret_backend_key" "boundary_root_key" {

backend = vault_mount.boundary_transit.path

name = "boundary_root_key"

}

resource "vault_transit_secret_backend_key" "boundary_global_worker_auth" {

backend = vault_mount.boundary_transit.path

name = "boundary_global_worker_auth"

}

resource "vault_transit_secret_backend_key" "boundary_global_recovery" {

backend = vault_mount.boundary_transit.path

name = "boundary_global_recovery"

}

resource "vault_policy" "boundary_policy" {

name = "boundary"

policy = <<EOT

path "${vault_mount.boundary_transit.path}/encrypt/*" {

capabilities = ["update"]

}

path "${vault_mount.boundary_transit.path}/decrypt/*" {

capabilities = ["update"]

}

EOT

}

Nomad Job

Below is an example of the Nomad job. It uses Consul Connect to secure controller <-> database communication, and Vault both to store the database secrets and, through the transit engine, to encrypt Boundary communication.

It assumes you already have Vault and the Nomad/Vault integration set up. The database secrets should be created in Vault at kv-nomad-apps/data/boundary/db.
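One way to create that secret is with Terraform, sketched below. This assumes kv-nomad-apps is an existing KV version 2 mount and that your Vault provider version includes the `vault_kv_secret_v2` resource; the variable names are hypothetical. The keys `user` and `pass` match what the job templates below read.

```hcl
# Hypothetical inputs for the database credentials.
variable "boundary_db_user" {
  type = string
}

variable "boundary_db_pass" {
  type      = string
  sensitive = true
}

# Written to kv-nomad-apps/data/boundary/db on a KV v2 mount.
resource "vault_kv_secret_v2" "boundary_db" {
  mount = "kv-nomad-apps"
  name  = "boundary/db"

  data_json = jsonencode({
    user = var.boundary_db_user
    pass = var.boundary_db_pass
  })
}
```

Note that the values end up in Terraform state, so the state backend should be protected accordingly.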

job "boundary" {

type = "service"

reschedule {

delay = "30s"

delay_function = "constant"

unlimited = true

}

update {

max_parallel = 1

health_check = "checks"

min_healthy_time = "10s"

healthy_deadline = "5m"

progress_deadline = "10m"

auto_revert = true

canary = 0

stagger = "30s"

}

vault {

policies = ["nomad-job", "boundary"]

}

group "boundary-controller" {

count = 3

constraint {

operator = "distinct_hosts"

value = "true"

}

restart {

interval = "10m"

attempts = 2

delay = "15s"

mode = "fail"

}

network {

mode = "bridge"

port "api" {

static = 9200

to = 9200

}

port "cluster" {

static = 9201

to = 9201

}

}

service {

name = "boundary-controller"

port = "api"

tags = [

"traefik.enable=true",

"traefik.http.routers.boundary.tls=true",

"traefik.http.routers.boundary.tls.domains[0].main=boundary.techstormpc.net",

]

connect {

sidecar_service {

tags = ["traefik.enable=false"]

proxy {

upstreams {

destination_name = "boundary-database"

local_bind_port = 5432

}

}

}

}

}

task "boundary-controller" {

driver = "docker"

config {

image = "hashicorp/boundary:0.8"

volumes = [

"local/boundary.hcl:/boundary/config.hcl"

]

ports = ["api", "cluster"]

cap_add = ["ipc_lock"]

}

template {

data = <<EOF

controller {

# This name attr must be unique across all controller instances if running in HA mode

name = "{{env "NOMAD_ALLOC_ID"}}"

public_cluster_addr = "{{ env "NOMAD_IP_cluster" }}"

{{with secret "kv-nomad-apps/data/boundary/db"}}

database {

url = "postgresql://{{.Data.data.user}}:{{.Data.data.pass}}@127.0.0.1:5432/boundary?sslmode=disable"

}

{{end}}

}

# API listener configuration block

listener "tcp" {

address = "0.0.0.0:9200"

purpose = "api"

cors_enabled = true

cors_allowed_origins = ["https://boundary.techstormpc.net", "serve://boundary"]

}

# Data-plane listener configuration block (used for worker coordination)

listener "tcp" {

address = "0.0.0.0:9201"

purpose = "cluster"

}

# Root KMS configuration block: this is the root key for Boundary

kms "transit" {

purpose = "root"

address = "https://vault.service.consul:8200"

disable_renewal = "true"

key_name = "boundary_root_key"

mount_path = "boundary_kms/"

}

# Worker authorization KMS

kms "transit" {

purpose = "worker-auth"

address = "https://vault.service.consul:8200"

disable_renewal = "true"

key_name = "boundary_global_worker_auth"

mount_path = "boundary_kms/"

}

# Recovery KMS block: configures the recovery key for Boundary

kms "transit" {

purpose = "recovery"

address = "https://vault.service.consul:8200"

disable_renewal = "true"

key_name = "boundary_global_recovery"

mount_path = "boundary_kms/"

}

EOF

destination = "local/boundary.hcl"

}

resources {

cpu = 500

memory = 512

}

}

}

group "boundary-worker" {

count = 1

network {

mode = "bridge"

port "proxy" {

static = 9202

to = 9202

}

}

task "boundary-worker" {

driver = "docker"

config {

image = "hashicorp/boundary:0.8"

volumes = [

"local/boundary.hcl:/boundary/config.hcl",

]

ports = ["proxy"]

cap_add = ["ipc_lock"]

}

template {

data = <<EOF

# Proxy listener configuration block

listener "tcp" {

address = "0.0.0.0"

purpose = "proxy"

}

worker {

name = "{{ env "NOMAD_ALLOC_ID" }}"

description = "Worker on {{ env "attr.unique.hostname" }}"

public_addr = "{{ env "NOMAD_IP_proxy" }}"

controllers = [

{{ range service "boundary-controller" }}

"{{ .Address }}:9201",

{{ end }}

]

}

# Worker authorization KMS

kms "transit" {

purpose = "worker-auth"

address = "https://vault.service.consul:8200"

disable_renewal = "true"

key_name = "boundary_global_worker_auth"

mount_path = "boundary_kms/"

}

EOF

destination = "local/boundary.hcl"

}

resources {

cpu = 1000

memory = 512

}

}

}

group "boundary-db" {

count = 1

network {

mode = "bridge"

}

service {

name = "boundary-database"

port = 5432

connect {

sidecar_service {}

}

}

volume "boundary_db" {

type = "host"

source = "boundary_db"

}

task "postgres" {

driver = "docker"

volume_mount {

volume = "boundary_db"

destination = "/var/lib/postgresql/data"

}

config {

image = "postgres"

}

template {

data = <<EOT

{{ with secret "kv-nomad-apps/data/boundary/db" }}

POSTGRES_DB = "boundary"

POSTGRES_USER = "{{.Data.data.user}}"

POSTGRES_PASSWORD = "{{.Data.data.pass}}"

{{ end }}

EOT

destination = "config.env"

env = true

}

}

}

}

Database init

You can initialize the database with the following Nomad batch job:

job "boundary-init" {

type = "batch"

vault {

policies = ["nomad-job", "boundary"]

}

group "boundary-init" {

network {

mode = "bridge"

}

service {

connect {

sidecar_service {

proxy {

upstreams {

destination_name = "boundary-database"

local_bind_port = 5432

}

}

}

}

}

task "boundary-init" {

driver = "docker"

config {

image = "hashicorp/boundary:0.8"

volumes = [

"local/boundary.hcl:/boundary/config.hcl"

]

args = [

"database", "init",

"-skip-auth-method-creation",

"-skip-host-resources-creation",

"-skip-scopes-creation",

"-skip-target-creation",

"-config", "/boundary/config.hcl"

]

cap_add = ["ipc_lock"]

}

template {

data = <<EOF

controller {

# This name attr must be unique across all controller instances if running in HA mode

name = "{{env "NOMAD_ALLOC_ID"}}"

public_cluster_addr = "{{ env "NOMAD_IP_cluster" }}"

{{with secret "kv-nomad-apps/data/boundary/db"}}

database {

url = "postgresql://{{.Data.data.user}}:{{.Data.data.pass}}@127.0.0.1:5432/boundary?sslmode=disable"

}

{{end}}

}

# Recovery KMS block: configures the recovery key for Boundary

kms "transit" {

purpose = "recovery"

address = "https://vault.service.consul:8200"

disable_renewal = "true"

key_name = "boundary_global_recovery"

mount_path = "boundary_kms/"

}

EOF

destination = "local/boundary.hcl"

}

}

}

}

Using Boundary

Connecting to a target

$env:BOUNDARY_ADDR="https://boundary.techstormpc.net"

$env:BOUNDARY_AUTH_METHOD_ID="<authMethodId>"

boundary authenticate oidc

boundary connect ssh -target-scope-name="Public" -target-name="Database"

Boundary has built-in connect subcommands for http, ssh, postgres, and rdp. If there is no built-in wrapper for your client, you can use the -exec flag to wrap any TCP session with it.

Any arguments after a trailing -- are passed through to the client.

The ssh command supports -style putty to launch in PuTTY, and -username to specify an alternative SSH username. However, I would recommend just using the built-in Windows SSH client.

Administration

Connecting with recovery method

You can authenticate with the CLI using the recovery method if OIDC isn't working (or on initial setup). It grants full administrative access.

The boundary config file requires:

kms "transit" {

purpose = "recovery"

address = "https://vault.service.consul:8200"

disable_renewal = "true"

key_name = "boundary_global_recovery"

mount_path = "boundary_kms/"

}

Note that the Vault token you provide needs a policy that can access /boundary_kms/<encrypt|decrypt>/boundary_global_recovery.
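A Vault policy scoped to just the recovery key could be sketched as follows. It mirrors the broader policy created in the KMS section, narrowed to the single key name.

```hcl
# Allow encrypt and decrypt with the recovery key only.
path "boundary_kms/encrypt/boundary_global_recovery" {
  capabilities = ["update"]
}

path "boundary_kms/decrypt/boundary_global_recovery" {
  capabilities = ["update"]
}
```

Because the recovery method grants full admin, a token with this policy should be short-lived and issued only to administrators.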

$env:VAULT_TOKEN="<SNIP>"

$env:BOUNDARY_RECOVERY_CONFIG="C:\Downloads\boundary.hcl"

boundary accounts list -auth-method-id amoidc_piMpkeD64V